49. Competitive Equilibria of a Model of Chang#

In addition to what’s in Anaconda, this lecture will need the following libraries:

!pip install polytope cvxopt

49.1. Overview#

This lecture describes how Chang [Chang, 1998] analyzed competitive equilibria and a best competitive equilibrium called a Ramsey plan.

He did this by

characterizing a competitive equilibrium recursively in a way also employed in the dynamic Stackelberg problems and Calvo model lectures to pose Stackelberg problems in linear economies, and then

appropriately adapting an argument of Abreu, Pearce, and Stachetti [Abreu et al., 1990] to describe key features of the set of competitive equilibria

Roberto Chang [Chang, 1998] chose a model of Calvo [Calvo, 1978] as a simple structure that conveys ideas that apply more broadly.

A textbook version of Chang’s model appears in chapter 25 of [Ljungqvist and Sargent, 2018].

This lecture and Credible Government Policies in Chang Model can be viewed as more sophisticated and complete treatments of the topics discussed in Ramsey plans, time inconsistency, sustainable plans.

Both this lecture and Credible Government Policies in Chang Model make extensive use of an idea to which we apply the nickname dynamic programming squared.

In dynamic programming squared problems there are typically two interrelated Bellman equations

A Bellman equation for a set of agents or followers with value or value function

A Bellman equation for a principal or Ramsey planner or Stackelberg leader with value or value function

We encountered problems with this structure in dynamic Stackelberg problems, optimal taxation with state-contingent debt, and other lectures.

We’ll start with some standard imports:

import numpy as np

import polytope

import matplotlib.pyplot as plt

49.1.1. The Setting#

First, we introduce some notation.

For a sequence of scalars

An infinitely lived

representative agent and an infinitely lived government exist at dates

The objects in play are

an initial quantity

a sequence of inverse money growth rates

a sequence of values of money

a sequence of real money holdings

a sequence of total tax collections

a sequence of per capita rates of consumption

a sequence of per capita incomes

A benevolent government chooses sequences

Given tax collection and price of money sequences, a representative household chooses

sequences

In competitive equilibrium, the price of money sequence

Chang adopts a version of a model that [Calvo, 1978] designed to exhibit time-inconsistency of a Ramsey policy in a simple and transparent setting.

By influencing the representative household’s expectations, government actions at

time

When setting a path for monetary expansion rates, the government takes into account how the household’s anticipations of the government’s future actions affect the household’s current decisions.

The ultimate source of time inconsistency is that a

time

49.2. Decisions#

49.2.1. The Household’s Problem#

A representative household faces a nonnegative value of money sequence

Facing vector

subject to

and

Here

Chang [Chang, 1998] assumes that

there is a finite level

The household carries real balances out of a period equal to

Inequality (49.2) is the household’s time

It tells how real balances

Equation (49.3) imposes an exogenous upper bound

49.2.2. Government#

The government chooses a sequence of inverse money growth rates with

time

The government purchases no goods.

It taxes only to acquire paper currency that it will withdraw from circulation (e.g., by burning it).

Let

Evidently, the value of paper currency meassured in units of the consumption good at time

The government faces a sequence of budget constraints with time

where

Evidently, this budget constraint can be rewritten as

which by using the definitions of

The restrictions

We define the set

To represent the idea that taxes are distorting, Chang makes the following assumption about outcomes for per capita output:

where

Example parameterizations

In some of our Python code deployed later in this lecture, we’ll assume the following functional forms:

The tax distortion function

Calvo’s and Chang’s purpose is not to model the causes of tax distortions in

any detail but simply to summarize

the outcome of those distortions via the function

A key part of the specification is that tax distortions are increasing in the absolute value of tax revenues.

Ramsey plan: A Ramsey plan is a competitive equilibrium that maximizes (49.1).

Within-period timing of decisions is as follows:

first, the government chooses

then given

then output

finally

This within-period timing confronts the government with

choices framed by how the private sector wants to respond when the

government takes time

This consideration will be important in lecture credible government policies when we study credible government policies.

The model is designed to focus on the intertemporal trade-offs between the welfare benefits of deflation and the welfare costs associated with the high tax collections required to retire money at a rate that delivers deflation.

A benevolent time

To promote the welfare increasing effects of high real balances, the government wants to induce gradual deflation.

49.2.3. Household’s Problem#

Given

First-order conditions with respect to

The last equation expresses Karush-Kuhn-Tucker complementary slackness conditions (see here).

These insist that the inequality is an equality at an interior solution for

Using

Define the following key variable

This is real money balances at time

From the standpoint of the household at time

By “intermediates” we mean that the future paths

The observation that the one dimensional promised marginal utility of real

balances

A closely related observation pervaded the analysis of Stackelberg plans in lecture dynamic Stackelberg problems.

49.3. Competitive Equilibrium#

Definition:

A government policy is a pair of sequences

A price system is a nonnegative value of money sequence

An allocation is a triple of nonnegative sequences

It is required that time

Definition:

Given

The government budget constraint is satisfied.

Given

49.4. Inventory of Objects in Play#

Chang constructs the following objects

A set

Let

Chang exploits the fact that a competitive equilibrium consists of a first period outcome

Competitive equilibria that have a recursive representation

A competitive equilibrium with a recursive representation consists of an initial

A competitive equilibrium can be represented recursively by iterating on

(49.8)#starting from

The range and domain of

A recursive representation of a Ramsey plan

A recursive representation of a Ramsey plan is a recursive competitive equilibrium

The Ramsey planner chooses

Iterations on the function

At time

A characterization of time-inconsistency of a Ramsey plan

Imagine that after a ‘revolution’ at time

This new planner would want to reset the

The incentive to reinitialize

By resetting

49.5. Analysis#

A competitive equilibrium is a triple of sequences

Chang works with a set of competitive equilibria defined as follows.

Definition:

Chang establishes that

Chang makes the following key observation that combines ideas of Abreu, Pearce, and Stacchetti [Abreu et al., 1990] with insights of Kydland and Prescott [Kydland and Prescott, 1980].

Proposition: The continuation of a competitive equilibrium is a competitive equilibrium.

That is,

(Lecture dynamic Stackelberg problems also used a version of this insight)

We can now state that a Ramsey problem is to

subject to restrictions (49.2), (49.3), and (49.6).

Evidently, associated with any competitive equilibrium

To bring out a recursive structure inherent in the Ramsey problem, Chang defines the set

Equation (49.6) inherits from the household’s Euler equation for

money holdings the property that the value of

This dependence is captured in the definition above by making

Chang establishes that

Next Chang advances:

Definition:

Thus,

If we knew the sets

Find the indirect value function

Compute the value of the Ramsey outcome by solving

Thus, Chang states the following

Proposition:

where maximization is subject to

and

and

and

Before we use this proposition to recover a recursive representation of the

Ramsey plan, note that the proposition relies on knowing the set

To find

We want an operator that maps a continuation

Chang lets

Elements of the set

Chang defines an operator

such that (49.11), (49.12), and (49.13) hold.

Thus,

Proposition:

The proposition characterizes

It is easy to establish that

This property allows Chang to compute

49.5.1. Some Useful Notation#

Let

A government strategy

Chang restricts the government’s choice of strategies to the following space:

In words,

Chang observes that

Definition:

Admissibility of

After any history

In words,

Remark:

Definition:

An allocation rule is a sequence of functions

Thus, the time

Definition: Given an admissible government strategy

49.5.2. Another Operator#

At this point it is convenient to introduce another operator that can be used to compute a Ramsey plan.

For computing a Ramsey plan, this operator is wasteful because it works with a state vector that is bigger than necessary.

We introduce this operator because it helps to prepare

the way for Chang’s operator called

It is also useful because a fixed point of the operator to

be defined here provides a good guess for an initial set

from which to initiate iterations on Chang’s set-to-set operator

Let

Let

Let

Think of using pairs

Define the operator

such that

It is possible to establish.

Proposition:

If

Proposition:

Monotonicity of

It can be shown that

This

Further, we can compute

As a very useful by-product, the algorithm that finds the largest fixed

point

49.6. Calculating all Promise-Value Pairs in CE#

Above we have defined the

such that

We noted that the set

Our implementation builds on ideas in this notebook.

To find

It was invented by Judd, Yeltekin, Conklin [Judd et al., 2003].

This algorithm constructs the smallest convex set that contains the

fixed point of the

Given that we are finding the smallest convex set that contains

Let

We approximate

A key feature of this algorithm is that we discretize the action space,

i.e., we create a grid of possible values for

The outer hyperplane approximation algorithm proceeds as follows:

Initialize subgradients,

Given a set of subgradients,

Solve a linear program (described below) for each action in the action space.

Find the maximum and update the corresponding hyperplane level,

If

Step 1 simply creates a large initial set

Given some set

To do this, for each subgradient

subject to

This problem maximizes the hyperplane level for a given set of actions.

The second part of Step 2 then finds the maximum possible hyperplane level across the action space.

The algorithm constructs a sequence of progressively smaller sets

Step 3 ends the algorithm when the difference between these sets is small enough.

We have created a Python class that solves the model assuming the following functional forms:

The remaining parameters

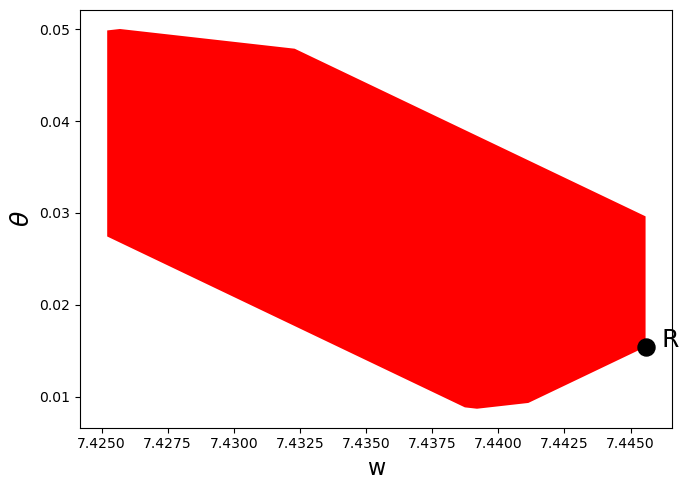

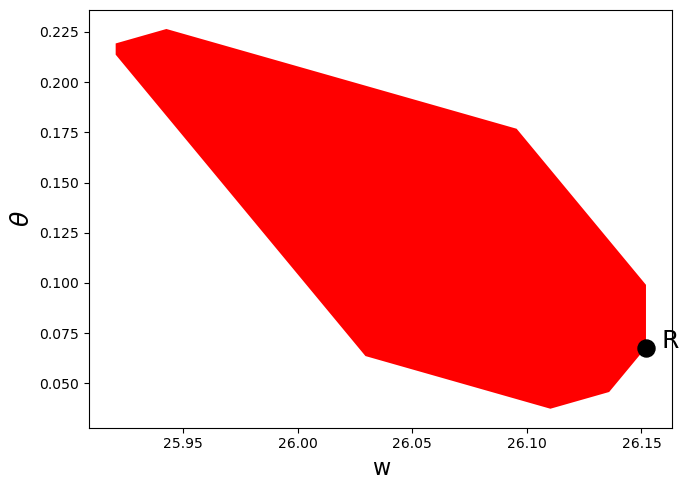

Below we use the class to solve the model and plot the resulting

equilibrium set, once with

(Here we have set the number of subgradients to 10 in order to speed up the code for now - we can increase accuracy by increasing the number of subgradients)

"""

Provides a class called ChangModel to solve different

parameterizations of the Chang (1998) model.

"""

import numpy as np

import time

from scipy.optimize import linprog, minimize

import numpy.polynomial.chebyshev as cheb

class ChangModel:

"""

Class to solve for the competitive and sustainable sets in the Chang (1998)

model, for different parameterizations.

"""

def __init__(self, β, mbar, h_min, h_max, n_h, n_m, N_g):

# Record parameters

self.β, self.mbar, self.h_min, self.h_max = β, mbar, h_min, h_max

self.n_h, self.n_m, self.N_g = n_h, n_m, N_g

# Create other parameters

self.m_min = 1e-9

self.m_max = self.mbar

self.N_a = self.n_h*self.n_m

# Utility and production functions

uc = lambda c: np.log(np.maximum(c, 1e-10)) # Clip to 1e-10 to avoid log(0) or log(-ve)

uc_p = lambda c: 1/c

v = lambda m: 1/500 * (mbar * m - 0.5 * m**2)**0.5

v_p = lambda m: 0.5/500 * (mbar * m - 0.5 * m**2)**(-0.5) * (mbar - m)

u = lambda h, m: uc(f(h, m)) + v(m)

def f(h, m):

x = m * (h - 1)

f = 180 - (0.4 * x)**2

return f

def θ(h, m):

x = m * (h - 1)

θ = uc_p(f(h, m)) * (m + x)

return θ

# Create set of possible action combinations, A

A1 = np.linspace(h_min, h_max, n_h).reshape(n_h, 1)

A2 = np.linspace(self.m_min, self.m_max, n_m).reshape(n_m, 1)

self.A = np.concatenate((np.kron(np.ones((n_m, 1)), A1),

np.kron(A2, np.ones((n_h, 1)))), axis=1)

# Pre-compute utility and output vectors

self.euler_vec = -np.multiply(self.A[:, 1], \

uc_p(f(self.A[:, 0], self.A[:, 1])) - v_p(self.A[:, 1]))

self.u_vec = u(self.A[:, 0], self.A[:, 1])

self.Θ_vec = θ(self.A[:, 0], self.A[:, 1])

self.f_vec = f(self.A[:, 0], self.A[:, 1])

self.bell_vec = np.multiply(uc_p(f(self.A[:, 0],

self.A[:, 1])),

np.multiply(self.A[:, 1],

(self.A[:, 0] - 1))) \

+ np.multiply(self.A[:, 1],

v_p(self.A[:, 1]))

# Find extrema of (w, θ) space for initial guess of equilibrium sets

p_vec = np.zeros(self.N_a)

w_vec = np.zeros(self.N_a)

for i in range(self.N_a):

p_vec[i] = self.Θ_vec[i]

w_vec[i] = self.u_vec[i]/(1 - β)

w_space = np.array([min(w_vec[~np.isinf(w_vec)]),

max(w_vec[~np.isinf(w_vec)])])

p_space = np.array([0, max(p_vec[~np.isinf(w_vec)])])

self.p_space = p_space

# Set up hyperplane levels and gradients for iterations

def SG_H_V(N, w_space, p_space):

"""

This function initializes the subgradients, hyperplane levels,

and extreme points of the value set by choosing an appropriate

origin and radius. It is based on a similar function in QuantEcon's

Games.jl

"""

# First, create a unit circle. Want points placed on [0, 2π]

inc = 2 * np.pi / N

degrees = np.arange(0, 2 * np.pi, inc)

# Points on circle

H = np.zeros((N, 2))

for i in range(N):

x = degrees[i]

H[i, 0] = np.cos(x)

H[i, 1] = np.sin(x)

# Then calculate origin and radius

o = np.array([np.mean(w_space), np.mean(p_space)])

r1 = max((max(w_space) - o[0])**2, (o[0] - min(w_space))**2)

r2 = max((max(p_space) - o[1])**2, (o[1] - min(p_space))**2)

r = np.sqrt(r1 + r2)

# Now calculate vertices

Z = np.zeros((2, N))

for i in range(N):

Z[0, i] = o[0] + r*H.T[0, i]

Z[1, i] = o[1] + r*H.T[1, i]

# Corresponding hyperplane levels

C = np.zeros(N)

for i in range(N):

C[i] = np.dot(Z[:, i], H[i, :])

return C, H, Z

C, self.H, Z = SG_H_V(N_g, w_space, p_space)

C = C.reshape(N_g, 1)

self.c0_c, self.c0_s, self.c1_c, self.c1_s = np.copy(C), np.copy(C), \

np.copy(C), np.copy(C)

self.z0_s, self.z0_c, self.z1_s, self.z1_c = np.copy(Z), np.copy(Z), \

np.copy(Z), np.copy(Z)

self.w_bnds_s, self.w_bnds_c = (w_space[0], w_space[1]), \

(w_space[0], w_space[1])

self.p_bnds_s, self.p_bnds_c = (p_space[0], p_space[1]), \

(p_space[0], p_space[1])

# Create dictionaries to save equilibrium set for each iteration

self.c_dic_s, self.c_dic_c = {}, {}

self.c_dic_s[0], self.c_dic_c[0] = self.c0_s, self.c0_c

def solve_worst_spe(self):

"""

Method to solve for BR(Z). See p.449 of Chang (1998)

"""

p_vec = np.full(self.N_a, np.nan)

c = [1, 0]

# Pre-compute constraints

aineq_mbar = np.vstack((self.H, np.array([0, -self.β])))

bineq_mbar = np.vstack((self.c0_s, 0))

aineq = self.H

bineq = self.c0_s

aeq = [[0, -self.β]]

for j in range(self.N_a):

# Only try if consumption is possible

if self.f_vec[j] > 0:

# If m = mbar, use inequality constraint

if self.A[j, 1] == self.mbar:

bineq_mbar[-1] = self.euler_vec[j]

res = linprog(c, A_ub=aineq_mbar, b_ub=bineq_mbar,

bounds=(self.w_bnds_s, self.p_bnds_s))

else:

beq = self.euler_vec[j]

res = linprog(c, A_ub=aineq, b_ub=bineq, A_eq=aeq, b_eq=beq,

bounds=(self.w_bnds_s, self.p_bnds_s))

if res.status == 0:

p_vec[j] = self.u_vec[j] + self.β * res.x[0]

# Max over h and min over other variables (see Chang (1998) p.449)

self.br_z = np.nanmax(np.nanmin(p_vec.reshape(self.n_m, self.n_h), 0))

def solve_subgradient(self):

"""

Method to solve for E(Z). See p.449 of Chang (1998)

"""

# Pre-compute constraints

aineq_C_mbar = np.vstack((self.H, np.array([0, -self.β])))

bineq_C_mbar = np.vstack((self.c0_c, 0))

aineq_C = self.H

bineq_C = self.c0_c

aeq_C = [[0, -self.β]]

aineq_S_mbar = np.vstack((np.vstack((self.H, np.array([0, -self.β]))),

np.array([-self.β, 0])))

bineq_S_mbar = np.vstack((self.c0_s, np.zeros((2, 1))))

aineq_S = np.vstack((self.H, np.array([-self.β, 0])))

bineq_S = np.vstack((self.c0_s, 0))

aeq_S = [[0, -self.β]]

# Update maximal hyperplane level

for i in range(self.N_g):

c_a1a2_c, t_a1a2_c = np.full(self.N_a, -np.inf), \

np.zeros((self.N_a, 2))

c_a1a2_s, t_a1a2_s = np.full(self.N_a, -np.inf), \

np.zeros((self.N_a, 2))

c = [-self.H[i, 0], -self.H[i, 1]]

for j in range(self.N_a):

# Only try if consumption is possible

if self.f_vec[j] > 0:

# COMPETITIVE EQUILIBRIA

# If m = mbar, use inequality constraint

if self.A[j, 1] == self.mbar:

bineq_C_mbar[-1] = self.euler_vec[j]

res = linprog(c, A_ub=aineq_C_mbar, b_ub=bineq_C_mbar,

bounds=(self.w_bnds_c, self.p_bnds_c))

# If m < mbar, use equality constraint

else:

beq_C = self.euler_vec[j]

res = linprog(c, A_ub=aineq_C, b_ub=bineq_C, A_eq = aeq_C,

b_eq = beq_C, bounds=(self.w_bnds_c, \

self.p_bnds_c))

if res.status == 0:

c_a1a2_c[j] = self.H[i, 0] * (self.u_vec[j] \

+ self.β * res.x[0]) + self.H[i, 1] * self.Θ_vec[j]

t_a1a2_c[j] = res.x

# SUSTAINABLE EQUILIBRIA

# If m = mbar, use inequality constraint

if self.A[j, 1] == self.mbar:

bineq_S_mbar[-2] = self.euler_vec[j]

bineq_S_mbar[-1] = self.u_vec[j] - self.br_z

res = linprog(c, A_ub=aineq_S_mbar, b_ub=bineq_S_mbar,

bounds=(self.w_bnds_s, self.p_bnds_s))

# If m < mbar, use equality constraint

else:

bineq_S[-1] = self.u_vec[j] - self.br_z

beq_S = self.euler_vec[j]

res = linprog(c, A_ub=aineq_S, b_ub=bineq_S, A_eq = aeq_S,

b_eq = beq_S, bounds=(self.w_bnds_s, \

self.p_bnds_s))

if res.status == 0:

c_a1a2_s[j] = self.H[i, 0] * (self.u_vec[j] \

+ self.β*res.x[0]) + self.H[i, 1] * self.Θ_vec[j]

t_a1a2_s[j] = res.x

idx_c = np.where(c_a1a2_c == max(c_a1a2_c))[0][0]

self.z1_c[:, i] = np.array([self.u_vec[idx_c]

+ self.β * t_a1a2_c[idx_c, 0],

self.Θ_vec[idx_c]])

idx_s = np.where(c_a1a2_s == max(c_a1a2_s))[0][0]

self.z1_s[:, i] = np.array([self.u_vec[idx_s]

+ self.β * t_a1a2_s[idx_s, 0],

self.Θ_vec[idx_s]])

for i in range(self.N_g):

self.c1_c[i] = np.dot(self.z1_c[:, i], self.H[i, :])

self.c1_s[i] = np.dot(self.z1_s[:, i], self.H[i, :])

def solve_sustainable(self, tol=1e-5, max_iter=250):

"""

Method to solve for the competitive and sustainable equilibrium sets.

"""

t = time.time()

diff = tol + 1

iters = 0

print('### --------------- ###')

print('Solving Chang Model Using Outer Hyperplane Approximation')

print('### --------------- ### \n')

print('Maximum difference when updating hyperplane levels:')

while diff > tol and iters < max_iter:

iters = iters + 1

self.solve_worst_spe()

self.solve_subgradient()

diff = max(np.maximum(abs(self.c0_c - self.c1_c),

abs(self.c0_s - self.c1_s)))

print(diff)

# Update hyperplane levels

self.c0_c, self.c0_s = np.copy(self.c1_c), np.copy(self.c1_s)

# Update bounds for w and θ

wmin_c, wmax_c = np.min(self.z1_c, axis=1)[0], \

np.max(self.z1_c, axis=1)[0]

pmin_c, pmax_c = np.min(self.z1_c, axis=1)[1], \

np.max(self.z1_c, axis=1)[1]

wmin_s, wmax_s = np.min(self.z1_s, axis=1)[0], \

np.max(self.z1_s, axis=1)[0]

pmin_S, pmax_S = np.min(self.z1_s, axis=1)[1], \

np.max(self.z1_s, axis=1)[1]

self.w_bnds_s, self.w_bnds_c = (wmin_s, wmax_s), (wmin_c, wmax_c)

self.p_bnds_s, self.p_bnds_c = (pmin_S, pmax_S), (pmin_c, pmax_c)

# Save iteration

self.c_dic_c[iters], self.c_dic_s[iters] = np.copy(self.c1_c), \

np.copy(self.c1_s)

self.iters = iters

elapsed = time.time() - t

print('Convergence achieved after {} iterations and {} \

seconds'.format(iters, round(elapsed, 2)))

def solve_bellman(self, θ_min, θ_max, order, disp=False, tol=1e-7, maxiters=100):

"""

Continuous Method to solve the Bellman equation in section 25.3

"""

mbar = self.mbar

# Utility and production functions

uc = lambda c: np.log(np.maximum(c, 1e-10)) # Clip to 1e-10 to avoid log(0) or log(-ve)

uc_p = lambda c: 1 / c

v = lambda m: 1 / 500 * (mbar * m - 0.5 * m**2)**0.5

v_p = lambda m: 0.5/500 * (mbar*m - 0.5 * m**2)**(-0.5) * (mbar - m)

u = lambda h, m: uc(f(h, m)) + v(m)

def f(h, m):

x = m * (h - 1)

f = 180 - (0.4 * x)**2

return f

def θ(h, m):

x = m * (h - 1)

θ = uc_p(f(h, m)) * (m + x)

return θ

# Bounds for Maximization

lb1 = np.array([self.h_min, 0, θ_min])

ub1 = np.array([self.h_max, self.mbar - 1e-5, θ_max])

lb2 = np.array([self.h_min, θ_min])

ub2 = np.array([self.h_max, θ_max])

# Initialize Value Function coefficients

# Calculate roots of Chebyshev polynomial

k = np.linspace(order, 1, order)

roots = np.cos((2 * k - 1) * np.pi / (2 * order))

# Scale to approximation space

s = θ_min + (roots - -1) / 2 * (θ_max - θ_min)

# Create a basis matrix

Φ = cheb.chebvander(roots, order - 1)

c = np.zeros(Φ.shape[0])

# Function to minimize and constraints

def p_fun(x):

scale = -1 + 2 * (x[2] - θ_min)/(θ_max - θ_min)

p_fun = - (u(x[0], x[1]) \

+ self.β * np.dot(cheb.chebvander(scale, order - 1), c))

return p_fun.item()

def p_fun2(x):

scale = -1 + 2*(x[1] - θ_min)/(θ_max - θ_min)

p_fun = - (u(x[0],mbar) \

+ self.β * np.dot(cheb.chebvander(scale, order - 1), c))

return p_fun.item()

cons1 = ({'type': 'eq', 'fun': lambda x: uc_p(f(x[0], x[1])) * x[1]

* (x[0] - 1) + v_p(x[1]) * x[1] + self.β * x[2] - θ},

{'type': 'eq', 'fun': lambda x: uc_p(f(x[0], x[1]))

* x[0] * x[1] - θ})

cons2 = ({'type': 'ineq', 'fun': lambda x: uc_p(f(x[0], mbar)) * mbar

* (x[0] - 1) + v_p(mbar) * mbar + self.β * x[1] - θ},

{'type': 'eq', 'fun': lambda x: uc_p(f(x[0], mbar))

* x[0] * mbar - θ})

bnds1 = np.concatenate([lb1.reshape(3, 1), ub1.reshape(3, 1)], axis=1)

bnds2 = np.concatenate([lb2.reshape(2, 1), ub2.reshape(2, 1)], axis=1)

# Bellman Iterations

diff = 1

iters = 1

while diff > tol:

# 1. Maximization, given value function guess

p_iter1 = np.zeros(order)

for i in range(order):

θ = s[i]

x0 = np.clip(lb1 + (ub1-lb1)/2, lb1, ub1)

res = minimize(p_fun,

x0,

method='SLSQP',

bounds=bnds1,

constraints=cons1,

tol=1e-10)

if res.success == True:

p_iter1[i] = -p_fun(res.x)

x0 = np.clip(lb2 + (ub2-lb2)/2, lb2, ub2)

res = minimize(p_fun2,

x0,

method='SLSQP',

bounds=bnds2,

constraints=cons2,

tol=1e-10)

if -p_fun2(res.x) > p_iter1[i] and res.success == True:

p_iter1[i] = -p_fun2(res.x)

# 2. Bellman updating of Value Function coefficients

c1 = np.linalg.solve(Φ, p_iter1)

# 3. Compute distance and update

diff = np.linalg.norm(c - c1)

if bool(disp == True):

print(diff)

c = np.copy(c1)

iters = iters + 1

if iters > maxiters:

print('Convergence failed after {} iterations'.format(maxiters))

break

self.θ_grid = s

self.p_iter = p_iter1

self.Φ = Φ

self.c = c

print('Convergence achieved after {} iterations'.format(iters))

# Check residuals

θ_grid_fine = np.linspace(θ_min, θ_max, 100)

resid_grid = np.zeros(100)

p_grid = np.zeros(100)

θ_prime_grid = np.zeros(100)

m_grid = np.zeros(100)

h_grid = np.zeros(100)

for i in range(100):

θ = θ_grid_fine[i]

x0 = np.clip(lb1 + (ub1-lb1)/2, lb1, ub1)

res = minimize(p_fun,

x0,

method='SLSQP',

bounds=bnds1,

constraints=cons1,

tol=1e-10)

if res.success == True:

p = -p_fun(res.x)

p_grid[i] = p

θ_prime_grid[i] = res.x[2]

h_grid[i] = res.x[0]

m_grid[i] = res.x[1]

x0 = np.clip(lb2 + (ub2-lb2)/2, lb2, ub2)

res = minimize(p_fun2,

x0,

method='SLSQP',

bounds=bnds2,

constraints=cons2,

tol=1e-10)

if -p_fun2(res.x) > p and res.success == True:

p = -p_fun2(res.x)

p_grid[i] = p

θ_prime_grid[i] = res.x[1]

h_grid[i] = res.x[0]

m_grid[i] = self.mbar

scale = -1 + 2 * (θ - θ_min)/(θ_max - θ_min)

resid_grid_val = np.dot(cheb.chebvander(scale, order-1), c) - p

resid_grid[i] = resid_grid_val.item()

self.resid_grid = resid_grid

self.θ_grid_fine = θ_grid_fine

self.θ_prime_grid = θ_prime_grid

self.m_grid = m_grid

self.h_grid = h_grid

self.p_grid = p_grid

self.x_grid = m_grid * (h_grid - 1)

# Simulate

θ_series = np.zeros(31)

m_series = np.zeros(30)

h_series = np.zeros(30)

# Find initial θ

def ValFun(x):

scale = -1 + 2*(x - θ_min)/(θ_max - θ_min)

p_fun = np.dot(cheb.chebvander(scale, order - 1), c)

return -p_fun

res = minimize(ValFun,

(θ_min + θ_max)/2,

bounds=[(θ_min, θ_max)])

θ_series[0] = res.x.item()

# Simulate

for i in range(30):

θ = θ_series[i]

x0 = np.clip(lb1 + (ub1-lb1)/2, lb1, ub1)

res = minimize(p_fun,

x0,

method='SLSQP',

bounds=bnds1,

constraints=cons1,

tol=1e-10)

if res.success == True:

p = -p_fun(res.x)

h_series[i] = res.x[0]

m_series[i] = res.x[1]

θ_series[i+1] = res.x[2]

x0 = np.clip(lb2 + (ub2-lb2)/2, lb2, ub2)

res2 = minimize(p_fun2,

x0,

method='SLSQP',

bounds=bnds2,

constraints=cons2,

tol=1e-10)

if -p_fun2(res2.x) > p and res2.success == True:

h_series[i] = res2.x[0]

m_series[i] = self.mbar

θ_series[i+1] = res2.x[1]

self.θ_series = θ_series

self.m_series = m_series

self.h_series = h_series

self.x_series = m_series * (h_series - 1)

ch1 = ChangModel(β=0.3, mbar=30, h_min=0.9, h_max=2, n_h=8, n_m=35, N_g=10)

ch1.solve_sustainable()

### --------------- ###

Solving Chang Model Using Outer Hyperplane Approximation

### --------------- ###

Maximum difference when updating hyperplane levels:

[1.9168]

[0.66782]

[0.49235]

[0.32412]

[0.19022]

[0.10863]

[0.05817]

[0.0262]

[0.01836]

[0.01415]

[0.00297]

[0.00089]

[0.00027]

[0.00008]

[0.00002]

[0.00001]

Convergence achieved after 16 iterations and 39.06 seconds

def plot_competitive(ChangModel):

"""

Method that only plots competitive equilibrium set

"""

poly_C = polytope.Polytope(ChangModel.H, ChangModel.c1_c)

ext_C = polytope.extreme(poly_C)

fig, ax = plt.subplots(figsize=(7, 5))

ax.set_xlabel('w', fontsize=16)

ax.set_ylabel(r"$\theta$", fontsize=18)

ax.fill(ext_C[:,0], ext_C[:,1], 'r', zorder=0)

ChangModel.min_theta = min(ext_C[:, 1])

ChangModel.max_theta = max(ext_C[:, 1])

# Add point showing Ramsey Plan

idx_Ramsey = np.where(ext_C[:, 0] == max(ext_C[:, 0]))[0][0]

R = ext_C[idx_Ramsey, :]

ax.scatter(R[0], R[1], 150, 'black', marker='o', zorder=1)

w_min = min(ext_C[:, 0])

# Label Ramsey Plan slightly to the right of the point

ax.annotate("R", xy=(R[0], R[1]), xytext=(R[0] + 0.03 * (R[0] - w_min),

R[1]), fontsize=18)

plt.tight_layout()

plt.show()

plot_competitive(ch1)

ch2 = ChangModel(β=0.8, mbar=30, h_min=0.9, h_max=1/0.8,

n_h=8, n_m=35, N_g=10)

ch2.solve_sustainable()

### --------------- ###

Solving Chang Model Using Outer Hyperplane Approximation

### --------------- ###

Maximum difference when updating hyperplane levels:

[0.06369]

[0.02476]

[0.02153]

[0.01915]

[0.01795]

[0.01642]

[0.01507]

[0.01284]

[0.01106]

[0.00694]

[0.0085]

[0.00781]

[0.00433]

[0.00492]

[0.00303]

[0.00182]

[0.00638]

[0.00116]

[0.00093]

[0.00075]

[0.0006]

[0.00494]

[0.00038]

[0.00121]

[0.00024]

[0.0002]

[0.00016]

[0.00013]

[0.0001]

[0.00008]

[0.00006]

[0.00005]

[0.00004]

[0.00003]

[0.00003]

[0.00002]

[0.00002]

[0.00001]

[0.00001]

[0.00001]

Convergence achieved after 40 iterations and 113.42 seconds

plot_competitive(ch2)

49.7. Solving a Continuation Ramsey Planner’s Bellman Equation#

In this section we solve the Bellman equation confronting a continuation Ramsey planner.

The construction of a Ramsey plan is decomposed into a two subproblems in Ramsey plans, time inconsistency, sustainable plans and dynamic Stackelberg problems.

Subproblem 1 is faced by a sequence of continuation Ramsey planners at

Subproblem 2 is faced by a Ramsey planner at

The problem is:

subject to:

To solve this Bellman equation, we must know the

set

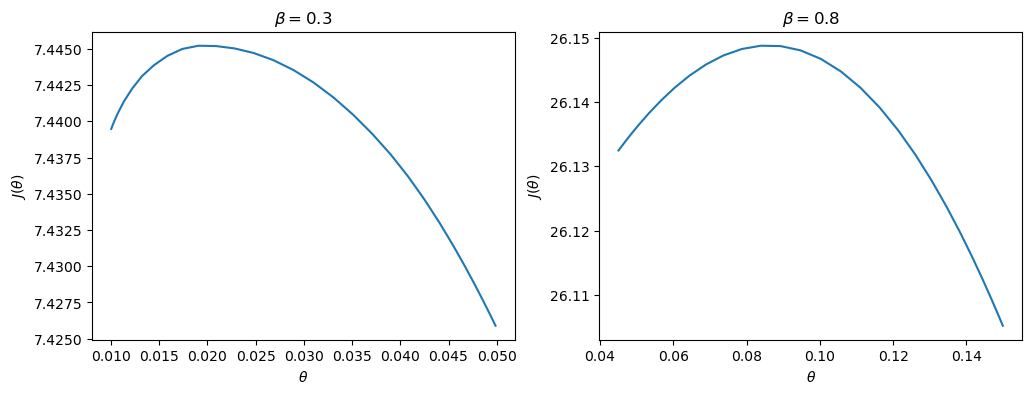

We have solved the Bellman equation for the two sets of parameter values for which we computed the equilibrium value sets above.

Hence for these parameter configurations, we know the bounds of

The two sets of parameters

differ only in the level of

From the figures earlier in this lecture, we know that when

ch1 = ChangModel(β=0.3, mbar=30, h_min=0.99, h_max=1/0.3,

n_h=8, n_m=35, N_g=50)

ch2 = ChangModel(β=0.8, mbar=30, h_min=0.1, h_max=1/0.8,

n_h=20, n_m=50, N_g=50)

ch1.solve_bellman(θ_min=0.01, θ_max=0.0499, order=30, tol=1e-6)

ch2.solve_bellman(θ_min=0.045, θ_max=0.15, order=30, tol=1e-6)

Convergence achieved after 15 iterations

/home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages/scipy/optimize/_slsqp_py.py:437: RuntimeWarning: Values in x were outside bounds during a minimize step, clipping to bounds

fx = wrapped_fun(x)

/home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages/scipy/optimize/_slsqp_py.py:441: RuntimeWarning: Values in x were outside bounds during a minimize step, clipping to bounds

g = append(wrapped_grad(x), 0.0)

/home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages/scipy/optimize/_slsqp_py.py:495: RuntimeWarning: Values in x were outside bounds during a minimize step, clipping to bounds

a_eq = vstack([con['jac'](x, *con['args'])

/home/runner/miniconda3/envs/quantecon/lib/python3.12/site-packages/scipy/optimize/_slsqp_py.py:501: RuntimeWarning: Values in x were outside bounds during a minimize step, clipping to bounds

a_ieq = vstack([con['jac'](x, *con['args'])

Convergence achieved after 72 iterations

First, a quick check that our approximations of the value functions are good.

We do this by calculating the residuals between iterates on the value function on a fine grid:

max(abs(ch1.resid_grid)), max(abs(ch2.resid_grid))

(6.46313155971967e-06, 6.875358415925348e-07)

The value functions plotted below trace out the right edges of the sets of equilibrium values plotted above

fig, axes = plt.subplots(1, 2, figsize=(12, 4))

for ax, model in zip(axes, (ch1, ch2)):

ax.plot(model.θ_grid, model.p_iter)

ax.set(xlabel=r"$\theta$",

ylabel=r"$J(\theta)$",

title=rf"$\beta = {model.β}$")

plt.show()

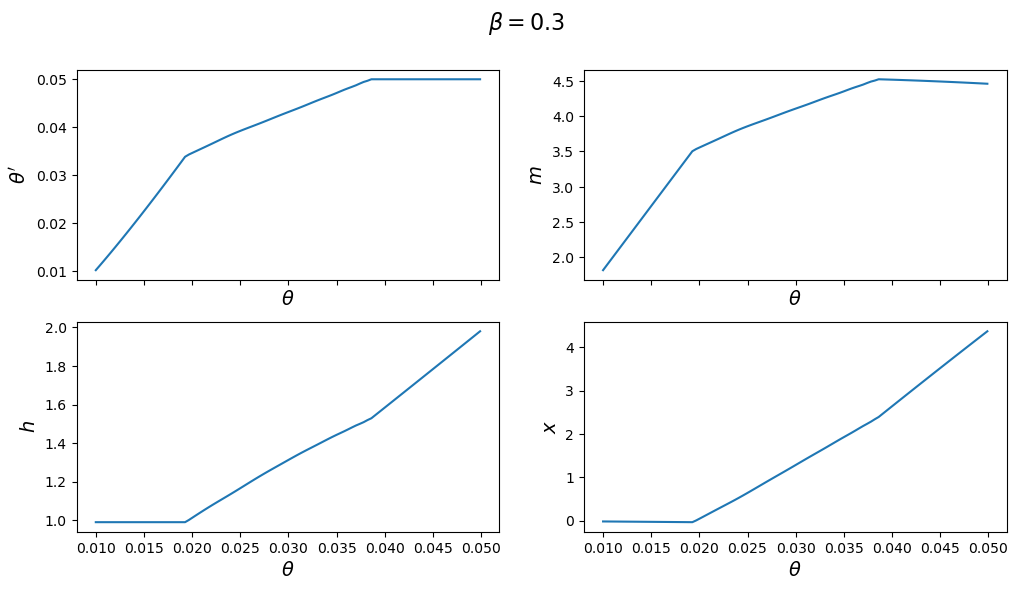

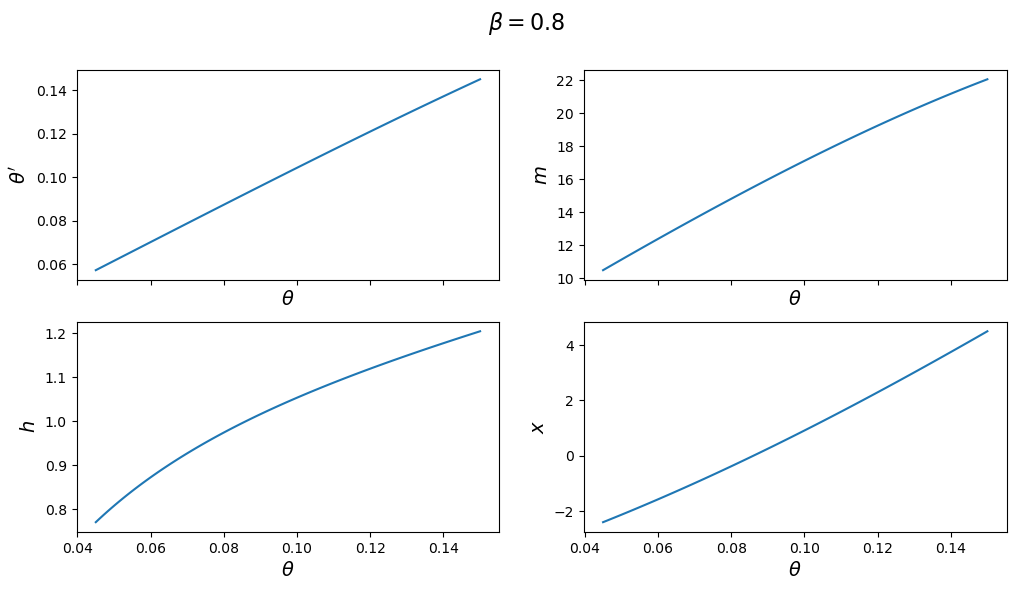

The next figure plots the optimal policy functions; values of

for model in (ch1, ch2):

fig, axes = plt.subplots(2, 2, figsize=(12, 6), sharex=True)

fig.suptitle(rf"$\beta = {model.β}$", fontsize=16)

plots = [model.θ_prime_grid, model.m_grid,

model.h_grid, model.x_grid]

labels = [r"$\theta'$", "$m$", "$h$", "$x$"]

for ax, plot, label in zip(axes.flatten(), plots, labels):

ax.plot(model.θ_grid_fine, plot)

ax.set_xlabel(r"$\theta$", fontsize=14)

ax.set_ylabel(label, fontsize=14)

plt.show()

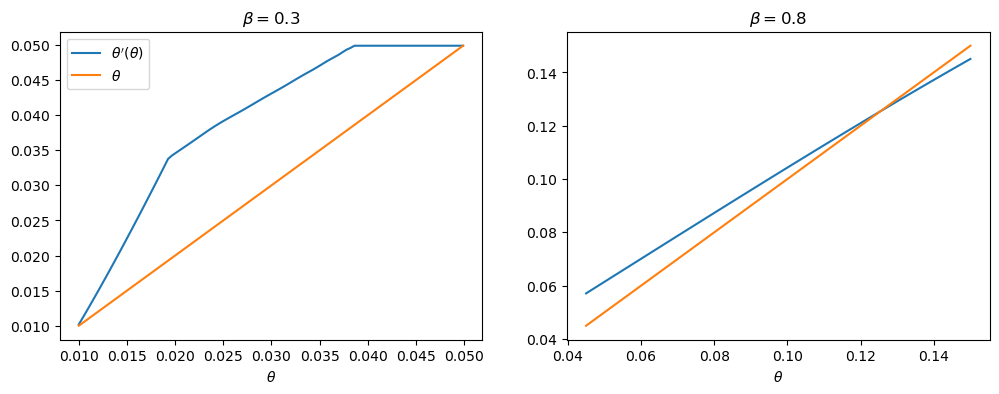

With the first set of parameter values, the value of

But with the second set of parameters it converges to a value in the interior of the set.

Consequently, the choice of

One way of seeing this is plotting

With the first set of parameter values, this function does not intersect the

45-degree line until

fig, axes = plt.subplots(1, 2, figsize=(12, 4))

for ax, model in zip(axes, (ch1, ch2)):

ax.plot(model.θ_grid_fine, model.θ_prime_grid, label=r"$\theta'(\theta)$")

ax.plot(model.θ_grid_fine, model.θ_grid_fine, label=r"$\theta$")

ax.set(xlabel=r"$\theta$", title=rf"$\beta = {model.β}$")

axes[0].legend()

plt.show()

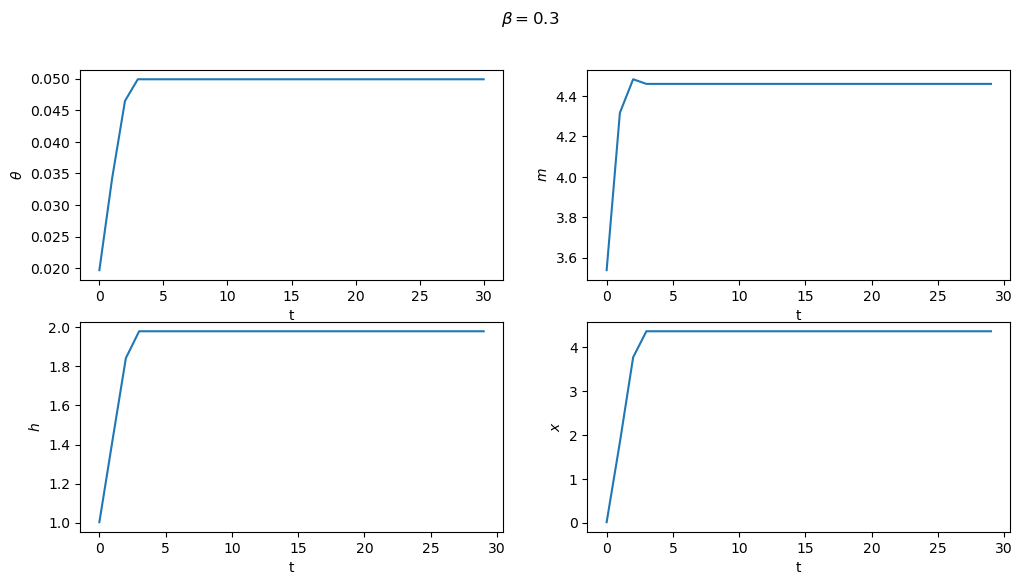

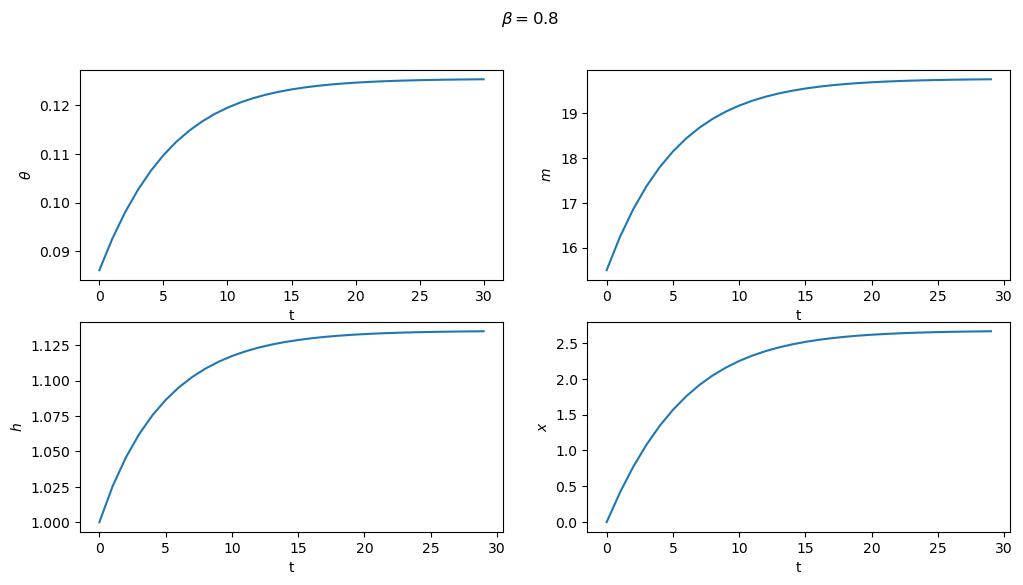

Subproblem 2 is equivalent to the planner choosing the initial value of

From this starting point, we can then trace out the paths for

These are shown below for both sets of parameters

for model in (ch1, ch2):

fig, axes = plt.subplots(2, 2, figsize=(12, 6))

fig.suptitle(rf"$\beta = {model.β}$")

plots = [model.θ_series, model.m_series, model.h_series, model.x_series]

labels = [r"$\theta$", "$m$", "$h$", "$x$"]

for ax, plot, label in zip(axes.flatten(), plots, labels):

ax.plot(plot)

ax.set(xlabel='t', ylabel=label)

plt.show()

49.7.1. Next Steps#

In Credible Government Policies in Chang Model we shall find

a subset of competitive equilibria that are sustainable

in the sense that a sequence of government administrations that chooses

sequentially, rather than once and for all at time

In the process of constructing them, we shall construct another, smaller set of competitive equilibria.